Evaluator’s note on the course and the project

Many activities of the project, including Work Package 4, of which the course is a part, were postponed in their start and implementation.[1] The COVID-19 pandemic complicated the preparation and implementation of the entire project. However, as interim reports 3 and … show, all team meetings took place online and the team met more frequently. The obstacles were overcome, and the course Towards Better Youth Employment Projects took place successfully. Participant feedback makes clear that the project team used the additional time to prepare a thorough learning tool, well tailored to the difficult conditions we are still living in. The course participants praised the toolkit, the e-learning and the workshops, and explicitly stressed how well prepared they were despite the online form. From the available information, the postponements do not affect the project team's ability to deliver the expected outputs and to reach the outcomes on schedule and in the planned quality. However, a responsible evaluation of the project requires enough time for data collection and analysis after the support to the target groups has taken place. It takes time until members of the target groups get a chance to use their newly acquired knowledge and skills in practice, and it takes further time until their effort bears fruit. The stories of change are collected 6 months after the completion of the course (or other kind of support), and this is the minimum time required for evaluation after the last support takes place.

Self-rated development and knowledge growth

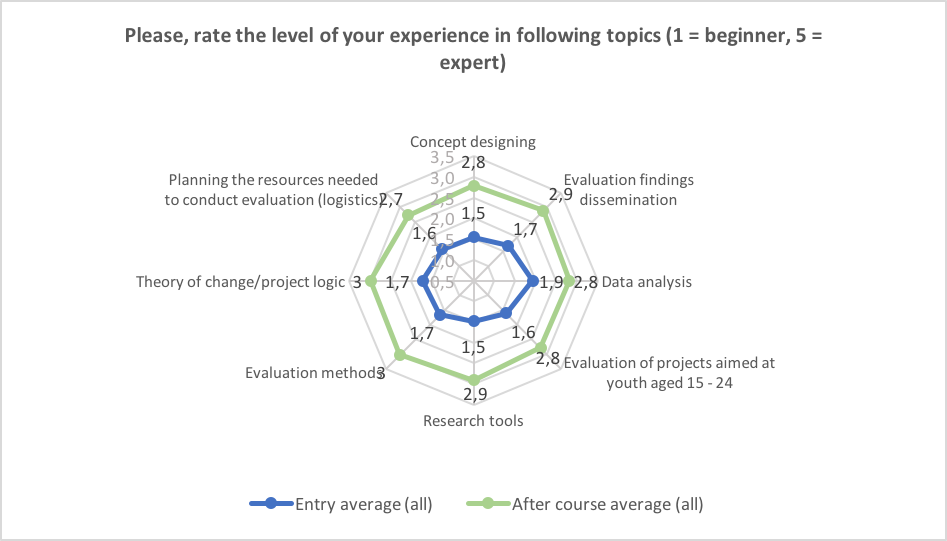

The participants of the course “Towards Better Youth Employment Projects” took part in a survey on their level of experience in various evaluation areas, their personal and organizational attitudes towards evaluation, and their expectations of the course. The questionnaire was administered before the course (1 – 30 March 2021) and after the course (27 April – 26 May 2021). The ex-post questionnaire contained additional questions on satisfaction with the course. In the entrance survey, 78 participants responded; in the ex-post survey, 48 participants responded. For 43 respondents, it was possible to match their full answers across both the entrance and the ex-post surveys.

First, participants were asked to assess their proficiency in various areas of evaluation. Before the course, most participants rated themselves as beginners (1 or 2 on a scale of 1 to 5) in the majority of areas. After the course, their self-assessment rose on average by at least one level in most areas; only in data analysis was the increase smaller, at 0.9.

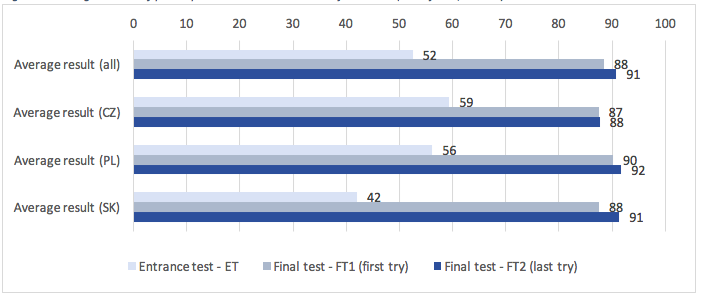

The growth in subjective self-assessment is supported by a more objective measurement. In the e-learning environment, participants were required to take an entrance test and a final test. The entrance test measured their initial knowledge in the areas covered by the individual modules of the course. Passing the final test (at least 60 out of 100 points) was also a condition for receiving a certificate. Since the entrance test and the final test were identical, the difference between their results reflects the participants' knowledge growth. Before the course, only one third of the participants reached at least 60 % (with an overall average of 52 %). At the end of the course, only two participants failed to reach the 60 % threshold, and neither of them had used all their allowed attempts. The average score in the final test was 91 %.

Change in attitudes and personal capacities

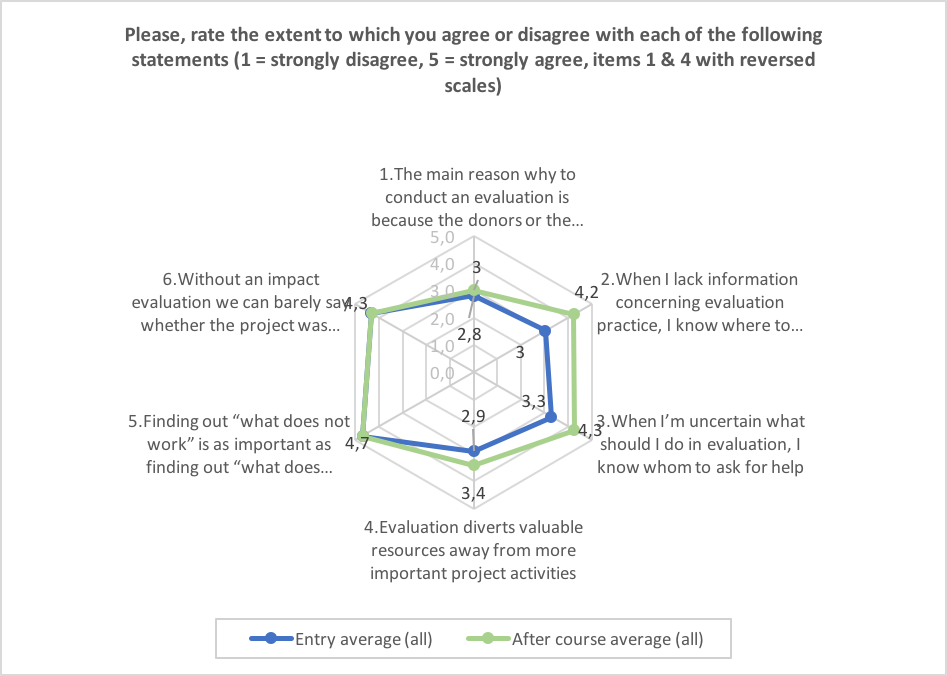

In the initial and ex-post surveys, participants were asked to what extent they agreed with several statements concerning their attitudes towards evaluation and their personal capacities. Statements about the importance of evaluation scored high already in the initial survey, and no change in these attitudes was recorded: there was no need to convince the convinced. According to the project's theory of change, the capacity to conduct evaluation successfully depends not only on personal knowledge, skills and attitudes but also on the personal networks of the evaluators. Courses are places not only for acquiring new knowledge and training new skills but also for meeting people who can help us in the future. The survey results suggest that after completing the course, participants are more certain about where to seek missing information on evaluation and whom to ask for help. We will learn whether participants actually used their newly acquired knowledge, skills and enhanced networks through interviews with the participants scheduled for September 2021 (6 months after completion of the course).

Change in the self-assessed organizational capacity

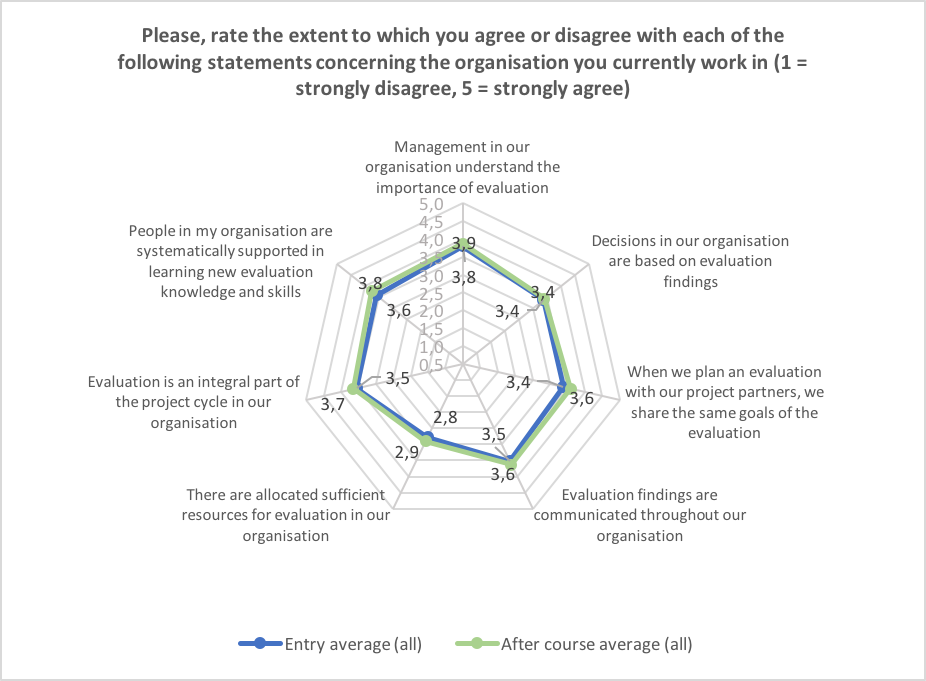

Another aspect of capacity building is enhancing the evaluation capacity of the organizations the course participants work for. This capacity rests on internal procedures, organizational attitudes and networks. For this reason, the participants were asked about their organizations' attitudes towards evaluation in the surveys before and after the course. However, a short course for a few participants, often just one person per organization, cannot be expected to change an entire organization so quickly. The aim was rather to create positive cores in these organizations on which change could accumulate like a snowball. Comparing the answers before and after the course shows a visible and consistent difference, but one too small to be considered a major shift. Success stories may emerge only over a longer period. To collect these stories of change, interviews with the participants are planned for September 2021 (6 months after completion of the course).

Feedback on the course and the toolkit

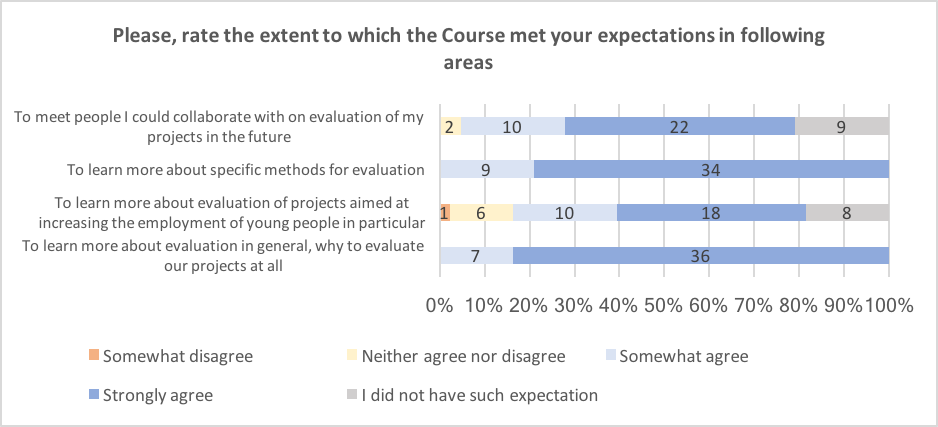

In the survey after the course, participants were asked whether the course met their expectations in various areas. It clearly met their expectations regarding learning about evaluation in general and about specific evaluation methods in particular. Participants were somewhat more reserved about whether the course helped them learn about evaluating projects aimed at increasing youth employment; some of them had no such expectation. A similar share of participants did not expect to meet people on the course with whom they could collaborate on evaluating their projects in the future.

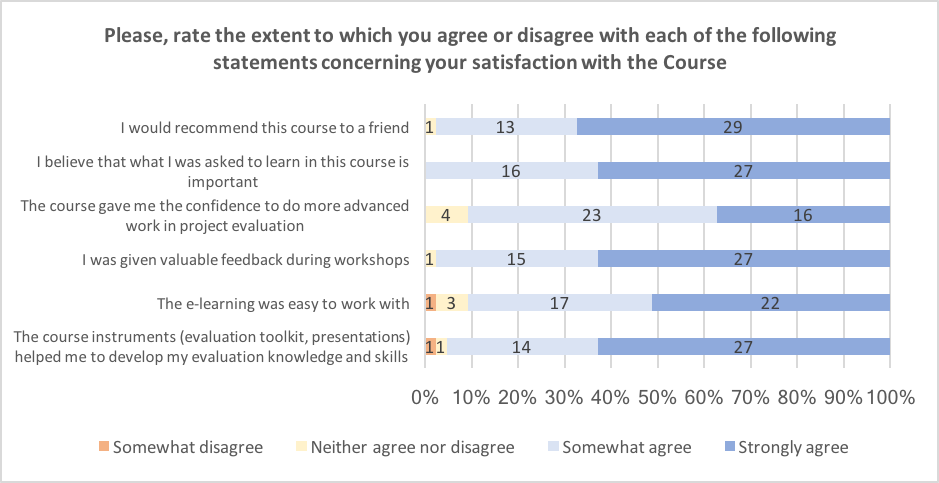

The participants appear to have been quite satisfied with the course. Apart from a few ambivalent reactions and two slightly negative answers concerning the e-learning and the course instruments, the overwhelming majority of participants somewhat or strongly agreed with the statements about their satisfaction with the course.

At the end of the ex-post survey, participants were asked to write some feedback on the course (“Please, sum up in several words your experience with the course. We also appreciate any feedback that would help us to improve the course in the future”). Although the question was not mandatory, participants left more than five full pages of positive feedback and words of appreciation. Participants most frequently valued the wealth of information, the good organization and the friendly spirit. One recurring topic was the connection between theory and practice: participants often had some theoretical background in evaluation but were aware that they lacked an understanding of how to apply it in practice. According to the participants, the course helped them bridge this gap. One Polish participant summed up their experience in the following words:

“The course was organized and conducted at the highest level. The commitment shown by the lecturers encouraged the participants to further deepen the secrets of evaluation. Their genuine sincerity and willingness to help during the analysis of the tools prepared by the participants made them want to take part in such a course again. The period of the pandemic and remote learning do not help with learning such a difficult and complicated material as evaluation, which is why the words of appreciation for the ability to transfer knowledge by these two lecturers are even more desirable. Thanks to this, they managed to build such closeness with the participants despite the distance.”

Besides the words of praise, there were also a few suggestions for improvement. One participant noted that some advanced materials were mostly in English, whether by omission or by intention, which made these parts more difficult for a beginner to navigate. Another participant suggested demonstrating the methods on a single case from beginning to end. One participant admitted that the remote form of the workshops was more challenging than a face-to-face form because it is harder to focus. Others, however, appreciated how well organized and delivered the workshops were despite the online form. Another participant would have preferred more, but shorter, workshops.

The implementation team also collected feedback on the e-learning course and on the toolkit. The questions were:

- What are your feelings after you read the toolkit? / What are your feelings about the course?

- Are there any elements of the toolkit/course you find useful? Which?

- Are there elements which need to be better explained? Which?

- Is there anything else that could be improved in the toolkit/course? What?

- Do you feel that anything is missing in the toolkit/course? Please specify.

Toolkit

The feedback on the toolkit was positive overall, and respondents found it comprehensible and clear. They especially appreciated the parts on evaluation methods, data collection, formulating evaluation questions, and using the theory of change as a basis for the next steps in evaluation. As for improvements, respondents would welcome more practical examples, especially in the parts on evaluation methods and on working with youth as a specific target group. For less advanced readers, the terminology might deserve more explanation.

Course

All opinions on the course were positive. Respondents appreciated that the course was comprehensible and clear, that it was linked to the toolkit, and that the tests helped them revise the content of the modules. Elements considered particularly useful included the tools and methods (especially the summary of the methods), the videos, the homework commented on by the lecturers during the workshops, the downloadable PDF files and the part on data collection. Again, more examples would be appreciated. Respondents also felt that the volume of content was large enough to fill at least one more workshop.

[1] More in ADDENDUM NUMBER 1 of the project Youth Impact, index number 2017-1-415.